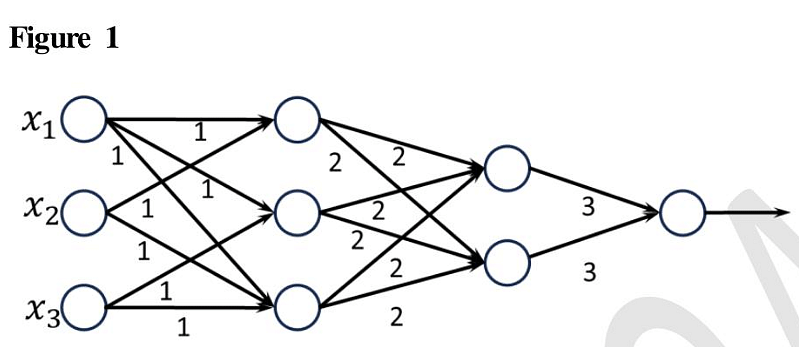

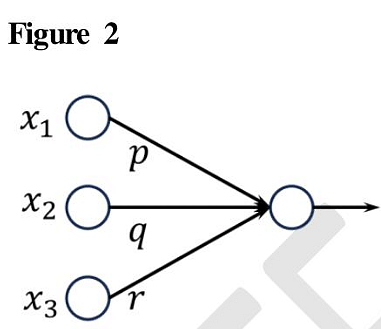

Consider the two neural networks (NNs) shown in Figures 1 and 2, with ReLU activation (ReLU(z) = max{0, z}, ∀z ∈ R), where R denotes the set of real numbers. The connections and their corresponding weights are shown in the figures. The biases at every neuron are set to 0. For what values of p, q, r in Figure 2 are the two NNs equivalent when x₁, x₂, x₃ are positive?
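One way to sanity-check a candidate answer to a question like this is to evaluate both networks on many random positive inputs and compare the outputs. The sketch below is only an illustration: the figure weights are not reproduced here, so `HIDDEN`, `OUTPUT`, and `CANDIDATE` are hypothetical placeholder values, and the assumed shapes (one network with a ReLU hidden layer, the other a single ReLU neuron with three weights) are an assumption rather than a description of the figures. All function names are likewise made up for this example.

```python
import random


def relu(z):
    """ReLU(z) = max{0, z}."""
    return max(0.0, z)


def two_layer_net(x, hidden_weights, output_weights):
    """Bias-free network: ReLU hidden layer, then one ReLU output neuron."""
    hidden = [relu(sum(w * xi for w, xi in zip(row, x))) for row in hidden_weights]
    return relu(sum(v * h for v, h in zip(output_weights, hidden)))


def single_neuron_net(x, weights):
    """Bias-free network: one ReLU neuron applied directly to the inputs."""
    return relu(sum(w * xi for w, xi in zip(weights, x)))


def agree_on_positive_inputs(net_a, net_b, trials=1000, tol=1e-9, seed=0):
    """Return True if both networks match on random strictly positive inputs."""
    rng = random.Random(seed)
    for _ in range(trials):
        x = [rng.uniform(0.01, 10.0) for _ in range(3)]
        a, b = net_a(x), net_b(x)
        if abs(a - b) > tol * max(1.0, abs(a), abs(b)):
            return False
    return True


# Hypothetical weights, NOT the ones in Figures 1 and 2.
HIDDEN = [[1.0, 2.0, 0.0], [0.0, 1.0, 3.0]]  # two hidden neurons
OUTPUT = [2.0, 1.0]
# With all weights and inputs positive, every ReLU acts as the identity,
# so the two-layer network collapses to 2*(x1 + 2*x2) + 1*(x2 + 3*x3).
CANDIDATE = [2.0, 5.0, 3.0]  # plays the role of (p, q, r)

if __name__ == "__main__":
    same = agree_on_positive_inputs(
        lambda x: two_layer_net(x, HIDDEN, OUTPUT),
        lambda x: single_neuron_net(x, CANDIDATE),
    )
    print("networks agree on sampled positive inputs:", same)
```

A numerical check of this kind can only refute a candidate (p, q, r); agreement on sampled inputs is evidence, not a proof, so the algebraic argument is still needed. If any weight in the actual figures is negative, some ReLU may clip to zero and the simple collapse noted in the comment would not apply.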