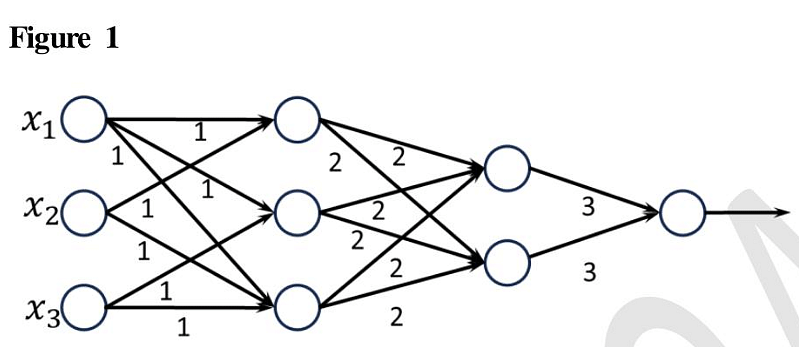

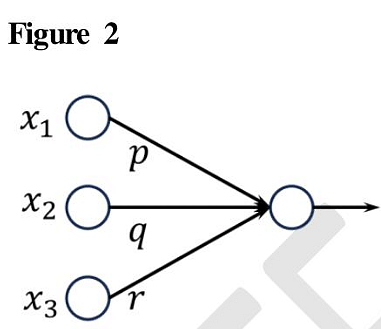

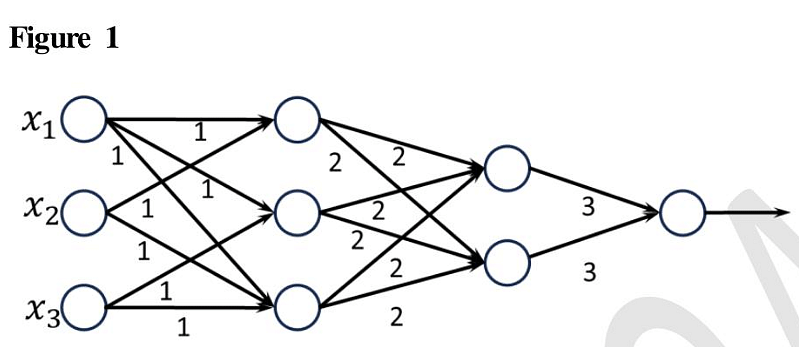

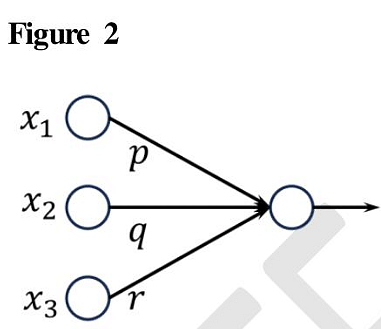

Consider the two neural networks (NNs) shown in Figures 1 and 2, with ReLU activation (ReLU(z) = max{0, z}, ∀z ∈ R), where R denotes the set of real numbers. The connections and their corresponding weights are shown in the figures. The biases at every neuron are set to 0. For what values of p, q, and r in Figure 2 are the two NNs equivalent when x1, x2, and x3 are positive?

- (A) p = 36, q = 24, r = 24

- (B) p = 24, q = 24, r = 36

- (C) p = 18, q = 36, r = 24

- (D) p = 36, q = 36, r = 36

The correct option is (A).
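The weights of Figures 1 and 2 are not reproduced in this text, so the sketch below uses hypothetical weights and does not compute p, q, or r. It illustrates the idea the question rests on, assuming all weights are nonnegative: with zero biases, ReLU(z) = z whenever z ≥ 0, so with positive inputs every ReLU acts as the identity and a multi-layer network collapses to the product of its weight matrices. The helper names (`forward`, `agree_on_positive_inputs`, etc.) and the matrices `W1`, `W2` are illustrative assumptions.

```python
import random

def relu(v):
    """Elementwise ReLU: max(0, z) for each entry."""
    return [max(0, z) for z in v]

def apply_layer(W, x):
    """Zero-bias linear layer: returns W @ x for W given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def forward(weights, x):
    """Run x through each weight matrix in turn, applying ReLU after every layer."""
    h = x
    for W in weights:
        h = relu(apply_layer(W, h))
    return h

def matmul(A, B):
    """Plain matrix product A @ B for matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def agree_on_positive_inputs(net_a, net_b, n_inputs, trials=1000, seed=0):
    """Compare two networks on random positive integer inputs (a spot check, not a proof)."""
    rng = random.Random(seed)
    for _ in range(trials):
        x = [rng.randint(1, 100) for _ in range(n_inputs)]
        if forward(net_a, x) != forward(net_b, x):
            return False
    return True

# Hypothetical weights (NOT those of Figures 1 and 2): 3 inputs -> 2 hidden -> 1 output.
W1 = [[1, 2, 0],
      [0, 3, 1]]
W2 = [[4, 2]]

# With nonnegative weights and positive inputs no ReLU clips, so the two-layer
# network equals a single layer whose weight matrix is W2 @ W1.
collapsed = [matmul(W2, W1)]

print(collapsed)                                         # [[[4, 14, 2]]]
print(agree_on_positive_inputs([W1, W2], collapsed, 3))  # True
# With a negative input the hidden ReLU clips, so the collapse no longer holds.
print(forward([W1, W2], [-5, 1, 0]), forward(collapsed, [-5, 1, 0]))  # [6] [0]
```

A related property is positive homogeneity: ReLU(cz) = c·ReLU(z) for any c ≥ 0, so scaling a hidden neuron's incoming weights by c > 0 and its outgoing weights by 1/c leaves the output unchanged. The random comparison is only a sanity check; in this collapsed setting, equality of the weight products is what actually establishes equivalence.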


Top Questions on Linear Algebra

- Consider the system of equations:

\[ \begin{aligned} x + 2y - z &= 3 \\ 2x + 4y - 2z &= 7 \\ 3x + 6y - 3z &= 9 \end{aligned} \]

Which of the following statements is true about the system?

- AP PGECET - 2025

- Mathematics

- Linear Algebra

- Consider a \(2 \times 2\) matrix \(A = \begin{bmatrix} a & b \\ c & d \end{bmatrix}\). If \(a+d=1\) and \(ad-bc=1\), then \(A^3\) is equal to

- CUET (PG) - 2025

- Statistics

- Linear Algebra

- The system of equations given by the augmented matrix \( \left[\begin{array}{ccc|c} 1 & 1 & 1 & 3 \\ 0 & -2 & -2 & 4 \\ 1 & -5 & 0 & 5 \end{array}\right] \) has the solution:

- CUET (PG) - 2025

- Statistics

- Linear Algebra

- \(A\) is an \(n \times n\) matrix of real numbers and \(A^3 - 3A^2 + 4A - 6I = 0\), where \(I\) is the \(n \times n\) unit matrix. If \(A^{-1}\) exists, then

- CUET (PG) - 2025

- Statistics

- Linear Algebra

- Let \(P\) and \(Q\) be two square matrices such that \(PQ = I\), where \(I\) is the identity matrix. Then zero is an eigenvalue of

- CUET (PG) - 2025

- Statistics

- Linear Algebra
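The two linear systems above can be checked by exact row reduction. The sketch below is an illustrative check, not part of the original questions; the helpers `rref`, `rank`, and `matmul` are hypothetical names. It flags a system as inconsistent when the coefficient matrix has lower rank than the augmented matrix, and reads the unique solution off the reduced augmented matrix. It also checks one example matrix with trace 1 and determinant 1: by the Cayley-Hamilton theorem every such \(2 \times 2\) matrix satisfies \(A^2 - A + I = 0\), so \(A^3 = A(A - I) = A^2 - A = -I\).

```python
from fractions import Fraction

def rref(rows):
    """Reduced row-echelon form of a matrix given as a list of rows, using exact fractions."""
    m = [[Fraction(v) for v in row] for row in rows]
    pivot_row = 0
    for col in range(len(m[0])):
        # Find a row at or below pivot_row with a nonzero entry in this column.
        pivot = next((r for r in range(pivot_row, len(m)) if m[r][col] != 0), None)
        if pivot is None:
            continue
        m[pivot_row], m[pivot] = m[pivot], m[pivot_row]
        lead = m[pivot_row][col]
        m[pivot_row] = [v / lead for v in m[pivot_row]]
        # Clear this column in every other row.
        for r in range(len(m)):
            if r != pivot_row and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [a - factor * b for a, b in zip(m[r], m[pivot_row])]
        pivot_row += 1
        if pivot_row == len(m):
            break
    return m

def rank(rows):
    """Number of nonzero rows in the reduced row-echelon form."""
    return sum(1 for row in rref(rows) if any(v != 0 for v in row))

def matmul(A, B):
    """Plain matrix product A @ B for matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

# First system: x + 2y - z = 3, 2x + 4y - 2z = 7, 3x + 6y - 3z = 9
coeffs = [[1, 2, -1], [2, 4, -2], [3, 6, -3]]
augmented = [row + [b] for row, b in zip(coeffs, [3, 7, 9])]
print(rank(coeffs), rank(augmented))  # 1 2 -> ranks differ, so no solution

# Augmented matrix [1 1 1 | 3; 0 -2 -2 | 4; 1 -5 0 | 5]; its reduced left block
# is the identity, so the last column is the unique solution (x, y, z).
reduced = rref([[1, 1, 1, 3], [0, -2, -2, 4], [1, -5, 0, 5]])
solution = [row[-1] for row in reduced]
print([int(v) for v in solution])     # [5, 0, -2]

# One example 2x2 matrix with trace 1 and determinant 1.
A = [[1, -1], [1, 0]]
print(matmul(matmul(A, A), A))        # [[-1, 0], [0, -1]]
```

The remaining two questions need no code: \(A^3 - 3A^2 + 4A - 6I = 0\) gives \(A(A^2 - 3A + 4I) = 6I\), so \(A^{-1} = \tfrac{1}{6}(A^2 - 3A + 4I)\); and \(PQ = I\) gives \(\det P \cdot \det Q = 1\), so both matrices are invertible and zero is an eigenvalue of neither.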

Questions Asked in GATE AR Exam

- A final-year student appears for placement interviews at two companies, S and T. Based on her interview performance, she estimates the probability of receiving job offers from companies S and T to be 0.8 and 0.6, respectively. Let \( p \) be the probability that she receives job offers from both the companies. Select the most appropriate option.

- GATE AR - 2025

- Probability

- P and Q play chess frequently against each other. Of these matches, P has won 80%, drawn 15%, and lost 5%. If they play 3 more matches, what is the probability of P winning exactly 2 of these 3 matches?

- In a regular semicircular arch of 2 m clear span, the thickness of the arch is 30 cm and the breadth of the wall is 40 cm. The total quantity of brickwork in the arch is _______ m\(^3\) (rounded off to two decimal places).

- GATE AR - 2025

- Architecture

- Two cars, P and Q, start from a point X in India at 10 AM. Car P travels north at 25 km/h and car Q travels east at 30 km/h. Car P travels continuously, but car Q stops for some time after traveling for one hour. If both cars are at the same distance from X at 11:30 AM, for how long (in minutes) did car Q stop?

- GATE AR - 2025

- GATE ME - 2025

- GATE PE - 2025

- Direction sense
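The numerical GATE questions above reduce to short calculations. The sketch below works them under stated assumptions, and the function names are hypothetical: the three chess matches are independent and each is won with probability 0.8; the arch's inner radius is half the 2 m clear span and its brickwork is the half-annulus area times the 0.4 m breadth; car Q resumes at 30 km/h after its stop; and for the placement question only the two marginal probabilities are known, so \( p \) can only be bounded, not determined.

```python
import math

def prob_exactly_k_wins(k, n, p_win):
    """Binomial probability of exactly k wins in n independent matches."""
    return math.comb(n, k) * p_win**k * (1 - p_win)**(n - k)

def semicircular_arch_volume(clear_span, thickness, breadth):
    """Brickwork of a semicircular arch: half-annulus area times breadth (metres)."""
    r_inner = clear_span / 2           # assumption: intrados radius = half the clear span
    r_outer = r_inner + thickness
    return math.pi / 2 * (r_outer**2 - r_inner**2) * breadth

def stop_minutes(speed_p, speed_q, elapsed_h):
    """Minutes car Q must stop so both cars are equidistant from X after elapsed_h hours."""
    distance = speed_p * elapsed_h     # P never stops
    moving_h = distance / speed_q      # time Q actually spends driving
    return (elapsed_h - moving_h) * 60

def joint_probability_bounds(p_s, p_t):
    """Frechet bounds on P(S and T) when only the marginals are known."""
    return max(0.0, p_s + p_t - 1), min(p_s, p_t)

print(round(prob_exactly_k_wins(2, 3, 0.8), 3))              # 0.384
print(round(semicircular_arch_volume(2.0, 0.30, 0.40), 2))   # 0.43
print(stop_minutes(25, 30, 1.5))                             # 15.0
print(tuple(round(v, 2) for v in joint_probability_bounds(0.8, 0.6)))  # (0.4, 0.6)
```

For the chess question, P fails to win a given match with probability 0.15 + 0.05 = 0.2, which is why the binomial uses \(1 - 0.8\) for a draw or loss alike.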

Identify the option that has the most appropriate sequence such that a coherent paragraph is formed:

Statement:

P. At once, without thinking much, people rushed towards the city in hordes with the sole aim of grabbing as much gold as they could.

Q. However, little did they realize about the impending hardships they would have to face on their way to the city: miles of mud, unfriendly forests, hungry beasts, and inimical local lords—all of which would reduce their chances of getting gold to almost zero.

R. All of them thought that easily they could lay their hands on gold and become wealthy overnight.

S. About a hundred years ago, the news that gold had been discovered in Kolar spread like wildfire and the whole State was in raptures.